This comprehensive guide will walk you through the essential considerations for evaluating and selecting generative AI language models. We’ll compare leading options, analyze key features, and provide a structured framework to help you make an informed decision aligned with your unique use case.

Understanding Generative AI Language Models

Generative AI language models are neural networks trained on vast amounts of text data. They learn patterns of grammar and meaning that allow them to generate human-like text in response to prompts. The “large” in large language models (LLMs) refers to their scale: they typically have billions of parameters (learned weights) and are trained on very large text corpora.

Modern LLMs can perform a wide range of language tasks, from answering questions and summarizing content to writing code and translating languages. Their versatility makes them valuable across industries, but also necessitates careful evaluation to find the right fit for specific applications.


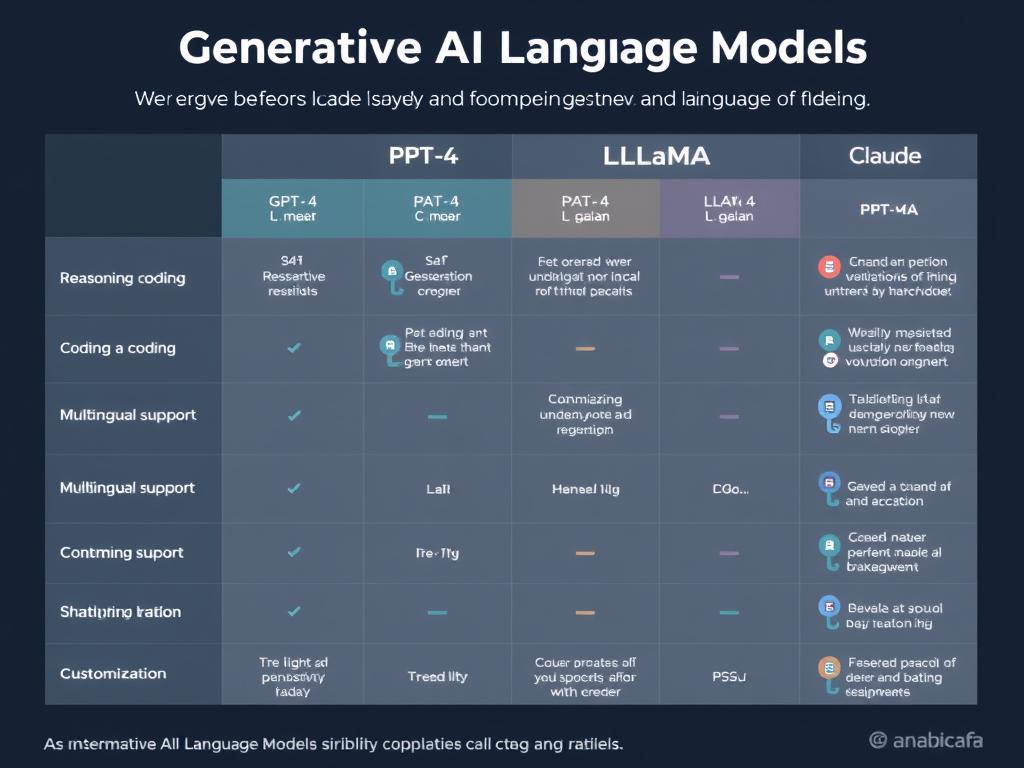

Overview of Popular Generative AI Models

GPT-4 (OpenAI)

OpenAI’s GPT-4 represents the cutting edge in commercial language models. With multimodal capabilities, it can process both text and images as input while generating text output. GPT-4 excels at complex reasoning, creative content generation, and code writing.

Undisclosed Parameter Count

PaLM 2 (Google)

Google’s Pathways Language Model 2 powers many of Google’s AI services. It demonstrates strong multilingual capabilities and excels at logical reasoning tasks. PaLM 2 shows particular strength in coding, mathematics, and translation across 100+ languages.

340B Parameters (reported, not officially confirmed)

Claude 2 (Anthropic)

Anthropic’s Claude 2 is designed with a focus on helpfulness, harmlessness, and honesty. It handles lengthy contexts (up to 100,000 tokens) and excels at following nuanced instructions. Claude is particularly strong in content summarization and analysis tasks.

Constitutional AI

LLaMA 2 (Meta)

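Meta’s LLaMA 2 is an openly available model whose weights are released under the Llama 2 Community License, which permits commercial use with some restrictions (for example, services with more than 700 million monthly active users must request a separate license from Meta). It comes in several sizes (7B, 13B, and 70B parameters), making it adaptable to different computational constraints. LLaMA 2 is particularly valuable for organizations that want to fine-tune models on their own data.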

Multiple Sizes

Gemini (Google)

Google’s Gemini is a multimodal model designed to work across text, images, audio, and video. It comes in three sizes (Ultra, Pro, Nano) for different deployment scenarios. Gemini excels at multimodal reasoning and complex problem-solving tasks.

Scalable

Falcon (Technology Innovation Institute)

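Falcon is an openly available model family trained largely on RefinedWeb, a filtered and deduplicated web dataset. It is available in multiple sizes (7B, 40B, and 180B parameters) and offers strong performance. The 7B and 40B models use the permissive Apache 2.0 license. Falcon 180B uses a separate TII license that allows commercial use but adds usage restrictions.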

Commercial-friendly

Understanding the strengths and limitations of each model is crucial for making an informed selection. While some excel at creative writing, others may be better suited for technical documentation or multilingual applications.


Key Features to Compare When Selecting Language Models

Technical Capabilities

- Parameter Count: Larger models (100B+ parameters) generally offer more sophisticated reasoning but require more computational resources.

- Context Window: The amount of text the model can consider at once, ranging from 2,048 tokens to 100,000+ tokens.

- Training Data: Models trained on diverse, high-quality datasets typically demonstrate better performance across varied tasks.

- Inference Speed: How quickly the model generates responses, critical for real-time applications.

- Multimodal Capabilities: Ability to process and understand multiple types of input (text, images, audio).
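A back-of-the-envelope estimate makes the parameter-count trade-off concrete. The Python sketch below uses a common rule of thumb: the memory needed to hold a model's weights is roughly its parameter count times the bytes per parameter at a given numeric precision. The estimate covers weights only. It ignores activations, the KV cache (which grows with the context window), and framework overhead, so real requirements are higher.

```python
# Rough memory needed just to hold model weights: parameters x bytes per parameter.
# Activations, KV cache, and framework overhead come on top of this (not modeled).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

for size in (7e9, 13e9, 70e9):  # the LLaMA 2 sizes mentioned in this guide
    print(f"{size / 1e9:.0f}B params: "
          f"{weight_memory_gb(size, 'fp16'):.1f} GB at fp16, "
          f"{weight_memory_gb(size, 'int4'):.1f} GB at int4")
```

For the LLaMA 2 sizes listed earlier, this gives about 14, 26, and 140 GB at fp16. With 4-bit quantization the figure drops to roughly a quarter of that.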

Practical Considerations

- Deployment Options: Cloud API, on-premises, edge devices, or hybrid approaches.

- Customization: Fine-tuning capabilities, prompt engineering flexibility, and adaptation to domain-specific tasks.

- Language Support: Range of languages and dialects the model can effectively process.

- Cost Structure: Pay-per-token, subscription, or one-time licensing fees.

- Latency Requirements: Response time needs for your specific application.
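The choice between pay-per-token pricing and a fixed-cost deployment often comes down to volume. The minimal sketch below computes the monthly token volume at which a fixed self-hosting budget matches pay-per-token spend. Both prices are hypothetical placeholders, not quotes for any real model or provider.

```python
# Hypothetical figures for illustration only; substitute your own quotes.
API_PRICE_PER_1K_TOKENS = 0.002    # USD, blended input + output (assumed)
SELF_HOSTED_MONTHLY_COST = 3000.0  # USD per month for GPUs and operations (assumed)

def monthly_api_cost(tokens_per_month: float, price_per_1k: float) -> float:
    """Pay-per-token cost scales linearly with usage."""
    return tokens_per_month / 1000 * price_per_1k

def break_even_tokens(fixed_monthly_cost: float, price_per_1k: float) -> float:
    """Monthly token volume at which a fixed-cost deployment equals API spend.

    Assumes the fixed-cost setup can actually serve that volume; capacity
    limits and extra hardware are not modeled here.
    """
    return fixed_monthly_cost / price_per_1k * 1000

volume = break_even_tokens(SELF_HOSTED_MONTHLY_COST, API_PRICE_PER_1K_TOKENS)
print(f"Break-even at about {volume:,.0f} tokens per month")
```

With these placeholder numbers, the break-even point is 1.5 billion tokens per month. Below that volume the API is cheaper. Above it, the fixed-cost option wins, provided the self-hosted setup can handle the load.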

Ethical and Governance Factors

- Safety Mechanisms: Built-in guardrails to prevent harmful, biased, or inappropriate outputs.

- Transparency: Documentation of training data, model architecture, and known limitations.

- Bias Mitigation: Approaches to reduce unfair biases in model outputs.

- Privacy Considerations: How user data is handled, stored, and potentially used for model improvement.

- Compliance: Alignment with industry regulations and standards relevant to your sector.


Industry-Specific Use Cases and Model Recommendations

Content Creation

Content marketers, publishers, and creative agencies can leverage generative AI to scale content production, generate ideas, and optimize existing materials.

Recommended Models:

- GPT-4: Excels at creative writing, blog posts, and marketing copy

- Claude 2: Strong for long-form content and nuanced tone adaptation

Publishing

Customer Service

Support teams can implement AI-powered chatbots and knowledge base assistants to provide 24/7 customer assistance and reduce resolution times.

Recommended Models:

- PaLM 2: Strong multilingual support for global customer bases

- Claude 2: Excellent at understanding complex customer queries

Retail

Software Development

Development teams can accelerate coding, debugging, and documentation tasks with AI assistants that understand programming concepts and patterns.

Recommended Models:

- GPT-4: Superior code generation across multiple languages

- LLaMA 2: Can be fine-tuned on proprietary codebases

Engineering

Healthcare

Medical professionals can utilize AI for research summarization, patient communication drafting, and clinical documentation assistance.

Recommended Models:

- Med-PaLM 2: Specialized for medical knowledge

- Claude 2: Strong safety features and medical content understanding

Research

Financial Services

Financial institutions can implement AI for market analysis, report generation, regulatory compliance, and personalized client communications.

Recommended Models:

- GPT-4: Strong reasoning for complex financial concepts

- Falcon: Can be deployed on-premises for data security

Banking

Education

Educational institutions can leverage AI for curriculum development, personalized learning materials, and administrative document processing.

Recommended Models:

- PaLM 2: Excellent multilingual support for diverse student populations

- Claude 2: Strong at explaining complex concepts clearly

Academic


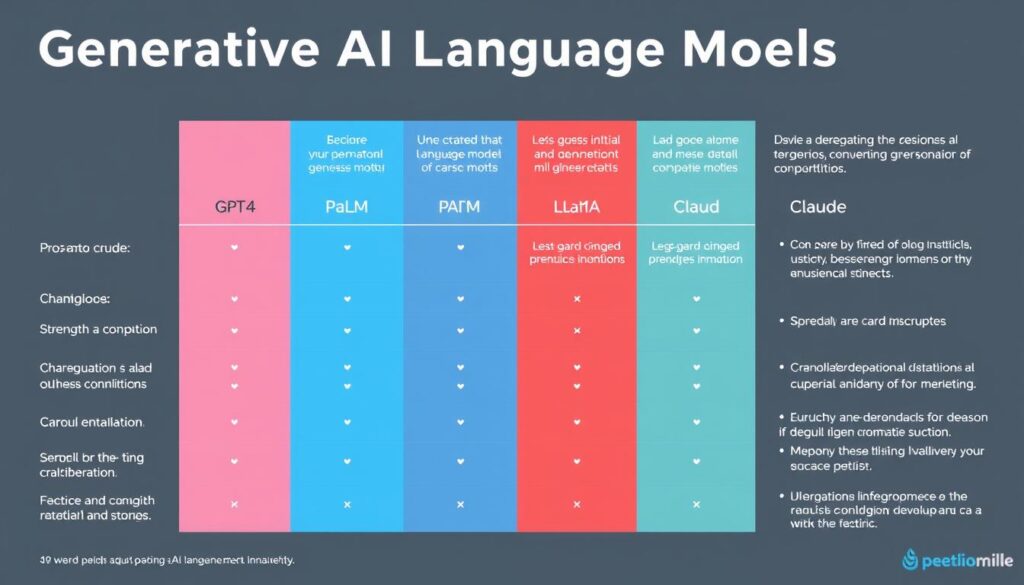

Pros and Cons Comparison of Leading Models

GPT-4 (OpenAI)

Advantages

- Superior reasoning and problem-solving capabilities

- Excellent code generation and understanding

- Strong multimodal capabilities (text + image input)

- Robust developer ecosystem and integration options

Disadvantages

- Higher cost compared to alternatives

- Limited customization options

- Potential data privacy concerns with cloud-only deployment

- Knowledge cutoff date limitations

LLaMA 2 (Meta)

Advantages

- Open-source with commercial use permissions

- Multiple model sizes for different resource constraints

- Can be deployed on-premises for data security

- Highly customizable through fine-tuning

Disadvantages

- Requires significant technical expertise to implement

- Higher computational requirements for self-hosting

- Less robust out-of-the-box safety features

- May require extensive prompt engineering

PaLM 2 (Google)

Advantages

- Exceptional multilingual capabilities

- Strong performance on reasoning and math tasks

- Seamless integration with Google Cloud ecosystem

- Regular updates and improvements

Disadvantages

- Less creative than some alternatives

- Limited customization options

- Cloud-only deployment model

- Potential vendor lock-in concerns

Claude 2 (Anthropic)

Advantages

- Extremely long context window (100K+ tokens)

- Strong safety features and alignment

- Excellent at following nuanced instructions

- Transparent about limitations

Disadvantages

- Less robust third-party integrations

- More limited API functionality

- Newer to market with less established ecosystem

- Not optimized for code generation


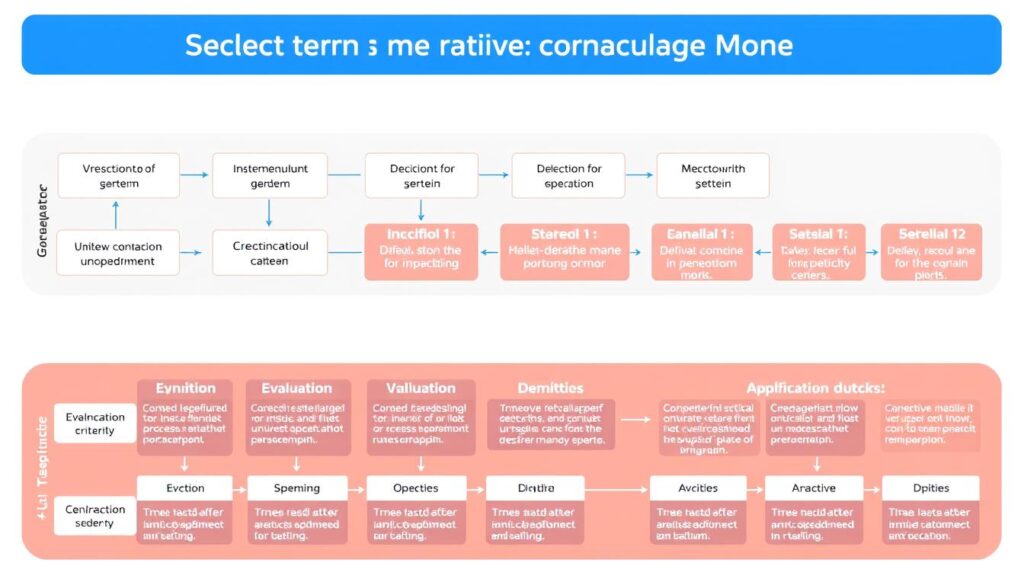

Step-by-Step Selection Framework

Step 1: Define Your Use Case Requirements

Begin by clearly articulating what you need the language model to accomplish. Document specific tasks, expected outputs, and performance metrics.

Key Questions: What specific problems are you solving? What types of content will you generate? What volume of requests do you anticipate?

Step 2: Establish Technical Constraints

Identify your infrastructure limitations, budget parameters, and security requirements that will influence your selection.

Key Questions: What is your deployment environment? What are your latency requirements? What is your budget per 1,000 tokens?
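Technical constraints work best as pass/fail filters applied before any scoring. The sketch below encodes the three questions above (deployment environment, latency, and budget per 1,000 tokens) as hard constraints. The `Candidate` and `Constraints` types are hypothetical, and the numbers are placeholders, not measurements of real models.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    deployment: set[str]        # e.g. {"cloud_api", "on_prem"}
    p95_latency_ms: float       # measured in your own tests
    price_per_1k_tokens: float  # USD; 0.0 when self-hosted (costed separately)

@dataclass
class Constraints:
    required_deployment: str
    max_latency_ms: float
    max_price_per_1k_tokens: float

def meets_constraints(c: Candidate, k: Constraints) -> bool:
    """Hard constraints are pass/fail: any single miss removes the model."""
    return (k.required_deployment in c.deployment
            and c.p95_latency_ms <= k.max_latency_ms
            and c.price_per_1k_tokens <= k.max_price_per_1k_tokens)

# Placeholder values for illustration only.
candidates = [
    Candidate("model-a", {"cloud_api"}, 800, 0.03),
    Candidate("model-b", {"cloud_api", "on_prem"}, 1200, 0.0),
    Candidate("model-c", {"on_prem"}, 400, 0.0),
]
limits = Constraints("on_prem", max_latency_ms=1000, max_price_per_1k_tokens=0.01)
shortlist = [c.name for c in candidates if meets_constraints(c, limits)]
print(shortlist)  # ['model-c']
```

Only `model-c` survives here. `model-a` lacks on-premises deployment, and `model-b` misses the latency limit.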

Step 3: Prioritize Model Capabilities

Create a weighted scorecard of the features most important for your use case, such as reasoning ability, creativity, or factual accuracy.

Key Questions: Which capabilities are must-haves vs. nice-to-haves? Are there specific tasks where performance is critical?
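A weighted scorecard reduces to a weighted sum: each capability score is multiplied by its priority weight, and the products are added. This sketch assumes your team assigns 1-5 ratings after testing. The capability names, weights, and scores are illustrative placeholders.

```python
# Priority weights (should sum to 1.0) and 1-5 ratings: placeholder values.
weights = {"reasoning": 0.4, "factual_accuracy": 0.35, "creativity": 0.25}
assert abs(sum(weights.values()) - 1.0) < 1e-9, "weights should sum to 1"

scores = {
    "model-a": {"reasoning": 5, "factual_accuracy": 4, "creativity": 3},
    "model-b": {"reasoning": 3, "factual_accuracy": 5, "creativity": 4},
}

def weighted_score(model_scores: dict[str, float], weights: dict[str, float]) -> float:
    """Sum of each capability score multiplied by its weight."""
    return sum(weights[cap] * model_scores[cap] for cap in weights)

ranking = sorted(scores, key=lambda m: weighted_score(scores[m], weights), reverse=True)
for model in ranking:
    print(model, round(weighted_score(scores[model], weights), 2))
```

With these inputs, `model-a` scores 4.15 and `model-b` scores 3.95. When the weights sum to 1, totals stay on the same 1-5 scale as the individual ratings, which makes them easier to discuss with stakeholders.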

Step 4: Evaluate Data Privacy and Security

Assess each model’s data handling practices and alignment with your organization’s privacy requirements and regulatory obligations.

Key Questions: Is your data sensitive? Do you need on-premises deployment? What compliance standards must you meet?

Step 5: Conduct Proof-of-Concept Testing

Test shortlisted models with representative prompts from your actual use case to evaluate real-world performance.

Key Questions: How does each model perform on your specific tasks? Are there quality or consistency issues?
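Proof-of-concept testing can start as a small harness that runs representative prompts through each candidate and applies an automatic check to every response. In this sketch, `fake_model` is a hypothetical stand-in for a real API or local model call. Each prompt is repeated a few times so that inconsistent outputs show up in the pass rate.

```python
from typing import Callable

# A test case pairs a representative prompt with an automatic check on the response.
TestCase = tuple[str, Callable[[str], bool]]

def pass_rate(generate: Callable[[str], str], cases: list[TestCase], runs: int = 3) -> float:
    """Return the fraction of (case, run) pairs whose output passes its check.

    Running each prompt several times surfaces inconsistency between samples.
    """
    passed = total = 0
    for prompt, check in cases:
        for _ in range(runs):
            total += 1
            if check(generate(prompt)):
                passed += 1
    return passed / total

def fake_model(prompt: str) -> str:
    # Hypothetical stand-in: replace with a call to each shortlisted model.
    return "Refunds are processed within 5 business days."

cases: list[TestCase] = [
    ("Summarize our refund policy.", lambda r: "refund" in r.lower()),
    ("How long is the refund window?", lambda r: "30 days" in r),
]
print(pass_rate(fake_model, cases))
```

In this example, the stub passes the refund-policy check on every run and fails the `30 days` check on every run, for a pass rate of 0.5. Qualities that are hard to check automatically, such as tone, still need human review.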

Step 6: Calculate Total Cost of Ownership

Look beyond per-token costs to include implementation, fine-tuning, maintenance, and scaling expenses.

Key Questions: What are the direct API costs? What engineering resources are required? What are the scaling costs?
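A total-cost-of-ownership estimate adds one-time and recurring engineering costs to usage-based API spend. This is a rough sketch under a simplifying assumption: usage grows by a fixed percentage each month. All inputs (prices, token volumes, engineering costs) are hypothetical placeholders.

```python
def total_cost_of_ownership(
    monthly_tokens: float,
    price_per_1k_tokens: float,
    months: int,
    implementation_cost: float,
    fine_tuning_cost: float,
    monthly_maintenance: float,
    monthly_growth: float = 0.0,
) -> float:
    """One-time costs plus usage and maintenance over `months`.

    Token volume grows by `monthly_growth` each month (0.1 means 10%).
    """
    usage_cost = 0.0
    tokens = monthly_tokens
    for _ in range(months):
        usage_cost += tokens / 1000 * price_per_1k_tokens
        tokens *= 1 + monthly_growth  # scaling: next month's volume
    one_time = implementation_cost + fine_tuning_cost
    return one_time + usage_cost + monthly_maintenance * months

# Placeholder first-year inputs: 10M tokens/month at $0.002 per 1K tokens.
flat = total_cost_of_ownership(10_000_000, 0.002, 12, 20_000, 5_000, 1_000)
growing = total_cost_of_ownership(10_000_000, 0.002, 12, 20_000, 5_000, 1_000,
                                  monthly_growth=0.10)
print(f"Flat usage: ${flat:,.0f}; 10% monthly growth: ${growing:,.0f}")
```

In this example, per-token charges account for only $240 of a first-year total of about $37,000. This shows why the estimate has to look beyond per-token prices.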

-

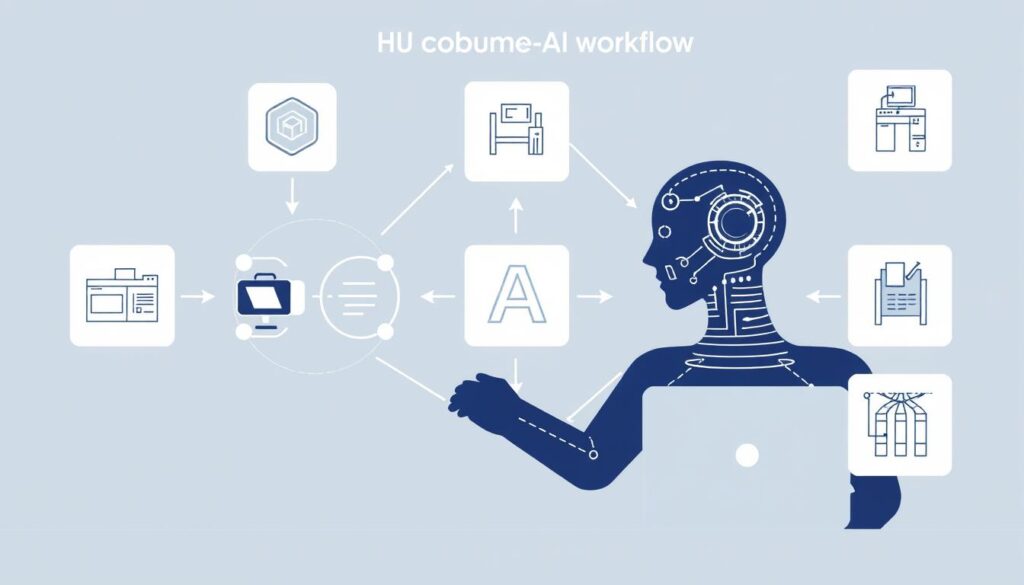

Plan for Implementation and Integration

Develop a roadmap for deploying your selected model, including API integration, prompt engineering, and monitoring systems.

Key Questions: How will you integrate the model into existing workflows? What safeguards will you implement?


Implementation Tips for Developers and Businesses

For Developers

- Implement Effective Prompt Engineering: Design robust prompt templates with clear instructions and constraints to guide model outputs.

- Build Fallback Mechanisms: Create graceful handling for cases where the model fails to generate appropriate responses.

- Implement Output Validation: Add post-processing logic to verify outputs meet quality and safety standards.

- Optimize Token Usage: Structure prompts efficiently to minimize token consumption and reduce costs.

- Set Up Monitoring: Implement systems to track performance, costs, and user feedback.
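Three of these tips (prompt templates, output validation, and fallbacks) fit together in one small wrapper. The sketch below uses a hypothetical classification task whose prompt asks for JSON output. Each entry in `models` is any function that takes a prompt and returns text. The names here stand in for real API clients and do not represent any vendor's SDK.

```python
import json
from typing import Callable, Optional

# Prompt template with explicit instructions and an output-format constraint.
PROMPT_TEMPLATE = (
    "Classify the customer message as billing, technical, or other.\n"
    'Respond with JSON only, for example {{"category": "billing"}}.\n\n'
    "Message: {message}"
)
ALLOWED = {"billing", "technical", "other"}

def validate(raw: str) -> Optional[str]:
    """Return the category if the output is JSON with an allowed value, else None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    category = data.get("category") if isinstance(data, dict) else None
    return category if isinstance(category, str) and category in ALLOWED else None

def classify(message: str, models: list[Callable[[str], str]]) -> str:
    """Try each model in order; fall back to a safe default if none validates."""
    prompt = PROMPT_TEMPLATE.format(message=message)
    for generate in models:
        try:
            result = validate(generate(prompt))
        except Exception:  # e.g. timeouts or network errors raised by the model call
            result = None
        if result is not None:
            return result
    return "other"  # safe default; route to a human queue for review

# Hypothetical stand-ins for real model clients.
def primary(prompt: str) -> str:
    return "Sure! This looks like a billing issue."  # not JSON: fails validation

def backup(prompt: str) -> str:
    return '{"category": "billing"}'

print(classify("I was charged twice this month.", [primary, backup]))
```

In this example, the primary model's conversational reply fails validation, so the backup's valid JSON is used instead. If every model fails, the request gets a safe default that a human can review. Logging which path each request took is a natural place to hook in monitoring.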

For Business Leaders

- Start with Focused Use Cases: Begin with well-defined, high-impact applications rather than broad implementations.

- Establish Clear Success Metrics: Define KPIs to measure the business impact of your AI implementation.

- Develop a Responsible AI Policy: Create guidelines for ethical AI use within your organization.

- Plan for Human-in-the-Loop: Design workflows that combine AI efficiency with human oversight.

- Invest in Team Training: Ensure your team understands how to effectively work with and prompt language models.

“The most successful AI implementations aren’t about replacing humans with automation, but about creating human-AI collaboration systems that leverage the strengths of both.”

Common Implementation Pitfalls to Avoid

- Unrealistic Expectations: Failing to understand model limitations or to communicate them to stakeholders

- Insufficient Prompt Design: Underestimating the importance of clear, structured prompts

- Neglecting Safety Measures: Failing to implement appropriate content filters and safeguards

- Cost Surprises: Not accounting for scaling costs as usage increases

- Overlooking User Experience: Focusing on model performance at the expense of user interaction design

Making Your Final Decision

Selecting the right generative AI language model is a multifaceted decision that requires balancing technical capabilities, business requirements, and practical considerations. By following the structured approach outlined in this guide, you can navigate the complex landscape of options and identify the model best suited to your specific needs.

Remember that the field of generative AI is evolving rapidly, with new models and capabilities emerging regularly. Establishing a process for ongoing evaluation and potential migration will ensure your implementation remains effective as the technology landscape changes.

Frequently Asked Questions

How often should we reevaluate our language model selection?

Given the rapid pace of advancement in generative AI, we recommend a quarterly review of your current model against new alternatives. However, unless there are significant performance issues or new capabilities that directly address your needs, changing models more frequently than annually can create unnecessary implementation overhead.

Should we use multiple language models for different tasks?

For organizations with diverse use cases, implementing multiple specialized models can be more effective than trying to find a single model that performs adequately across all tasks. For example, you might use one model optimized for creative content and another for technical documentation.
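Running multiple models usually means adding a thin routing layer that sends each request to the model chosen for its task type. In this minimal sketch, the two model functions are hypothetical placeholders for real clients. Unknown task types fall back to a default model.

```python
from typing import Callable

# Hypothetical stand-ins: in practice each would wrap a real API or local model.
def creative_model(prompt: str) -> str:
    return f"[creative] {prompt}"

def docs_model(prompt: str) -> str:
    return f"[docs] {prompt}"

# Task type -> model chosen during evaluation.
ROUTES: dict[str, Callable[[str], str]] = {
    "marketing_copy": creative_model,
    "technical_docs": docs_model,
}

def route(task_type: str, prompt: str,
          default: Callable[[str], str] = docs_model) -> str:
    """Send the prompt to the model registered for task_type, else to default."""
    return ROUTES.get(task_type, default)(prompt)

print(route("marketing_copy", "Write a tagline for our new app."))
print(route("unknown_task", "Describe the API."))
```

Keeping the mapping in one table also makes later migrations cheaper: swapping the model for a task type becomes a one-line change.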

How do we measure ROI for generative AI implementations?

Effective ROI measurement combines quantitative metrics (time saved, content produced, customer inquiries resolved) with qualitative assessments (content quality, user satisfaction). Establish baseline measurements before implementation and track changes over time to demonstrate value.
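Once a baseline exists, the quantitative side of ROI is simple arithmetic. This sketch assumes the benefit is measured as hours saved relative to the pre-implementation baseline, valued at a loaded hourly cost. The function name and all figures are illustrative. Qualitative measures such as content quality or user satisfaction need to be tracked separately.

```python
def roi(hours_saved_per_month: float, hourly_cost: float, monthly_ai_cost: float,
        months: int, one_time_cost: float) -> float:
    """Return (benefit - cost) / cost over the period; 0.5 means a 50% return."""
    benefit = hours_saved_per_month * hourly_cost * months
    cost = monthly_ai_cost * months + one_time_cost
    return (benefit - cost) / cost

# Illustrative year: 120 hours saved per month at $50/hour, versus $1,500/month
# in model costs plus $30,000 of one-time implementation work.
print(f"{roi(120, 50, 1_500, 12, 30_000):.0%}")  # 50%
```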
